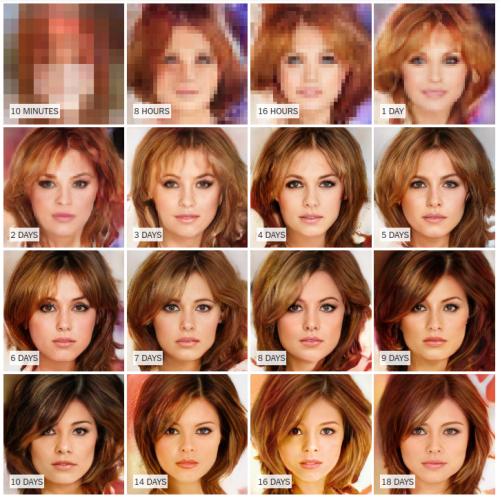

The New York Times is running a fascinating article on the progress of artificial intelligence and machine learning in both identifying and generating fake photos: How an A.I. ‘Cat-and-Mouse Game’ Generates Believable Fake Photos. The image above shows the progress of an AI working against itself and learning from its own results. One part tries to tell whether a photo is fake, and the other part tries to generate a fake photo that will pass that test. When a fake fails the test, the system learns, improves, and tries again. Look at the last row of photos, which are super realistic and took the system between 10 and 18 days to learn how to generate.
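For the curious, here is a rough toy sketch of that cat-and-mouse loop in Python. It is not the Nvidia system from the article. It assumes the "photos" are just single numbers, the detector is a one-line logistic classifier, and the forger can only shift random noise by an offset. The names `train_toy_gan`, `theta`, `w`, and `b` are made up for illustration.

```python
import math
import random


def sigmoid(x):
    # Numerically safe logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.05, real_mean=4.0, seed=0):
    """Toy adversarial loop on 1-D numbers (an illustrative assumption)."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0  # detector: D(x) = sigmoid(w * x + b), its guess that x is real
    theta = 0.0      # forger: G(z) = z + theta, noise shifted by a learned offset
    history = []
    for _ in range(steps):
        real = rng.gauss(real_mean, 1.0)     # a "real photo"
        fake = rng.gauss(0.0, 1.0) + theta   # a "fake photo"

        # Detector step: gradient ascent on log D(real) + log(1 - D(fake)).
        d_real = sigmoid(w * real + b)
        d_fake = sigmoid(w * fake + b)
        w += lr * ((1.0 - d_real) * real - d_fake * fake)
        b += lr * ((1.0 - d_real) - d_fake)

        # Forger step: gradient ascent on log D(fake), i.e. try to pass the test.
        d_fake = sigmoid(w * fake + b)
        theta += lr * (1.0 - d_fake) * w
        history.append(theta)
    return history


if __name__ == "__main__":
    offsets = train_toy_gan()
    print("forger offset at the end of training:", offsets[-1])
```

Each pass through the loop is one round of the game: the detector adjusts to separate real from fake, then the forger adjusts to fool the updated detector. Adversarial training like this can wobble back and forth rather than settle, so treat the numbers it prints as a demonstration of the idea and nothing more.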

But that’s not all. It gets better, and I quote:

> A second team of Nvidia researchers recently built a system that can automatically alter a street photo taken on a summer’s day so that it looks like a snowy winter scene. Researchers at the University of California, Berkeley, have designed another that learns to convert horses into zebras and Monets into Van Goghs. DeepMind, a London-based A.I. lab owned by Google, is exploring technology that can generate its own videos. And Adobe is fashioning similar machine learning techniques with an eye toward pushing them into products like Photoshop, its popular image design tool.

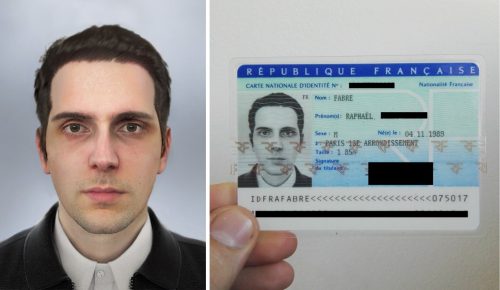

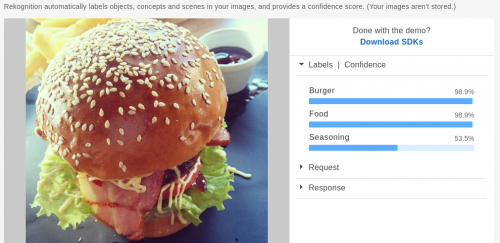

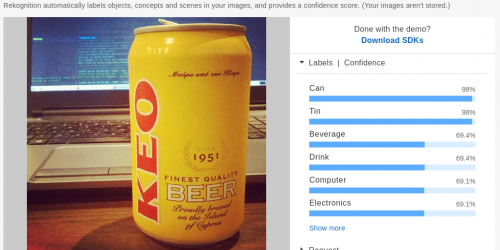

Here are a few more photos that were generated:

This is remarkable. But if you keep reading the article, you’ll quickly discover that there is even more to it. What’s next in line after pictures? You guessed it: videos. You’d better sit down before you watch this video of a lip-synced Obama:

So, can’t trust the TV. Can’t trust the Internet. Who do you trust?