“Persisting state between AWS EC2 spot instances” is a handy guide to using Amazon EC2 spot instances instead of on-demand or reserved instances while preserving the state of the instance between terminations. This is not something that I’ve personally tried yet, but with the ever-growing number of instances I manage on AWS, this definitely looks like an interesting approach.

Tag: Amazon EC2

Amazon AWS : MTU for EC2

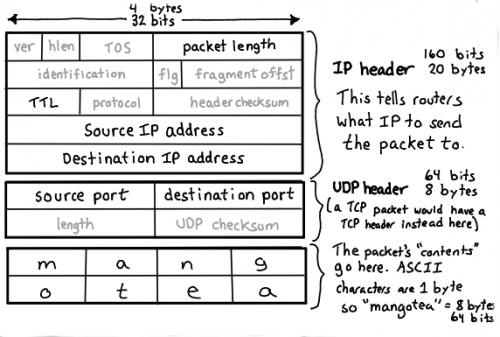

I came across this handy Amazon AWS manual on maximum transmission unit (MTU) configuration for EC2 instances. This is not something one needs every day, but I’m sure that when I do need it, I’ll otherwise spend hours trying to find it.

The maximum transmission unit (MTU) of a network connection is the size, in bytes, of the largest permissible packet that can be passed over the connection. The larger the MTU of a connection, the more data that can be passed in a single packet. Ethernet packets consist of the frame, or the actual data you are sending, and the network overhead information that surrounds it.

Ethernet frames can come in different formats, and the most common format is the standard Ethernet v2 frame format. It supports 1500 MTU, which is the largest Ethernet packet size supported over most of the Internet. The maximum supported MTU for an instance depends on its instance type. All Amazon EC2 instance types support 1500 MTU, and many current instance sizes support 9001 MTU, or jumbo frames.

The following instances support jumbo frames:

- Compute optimized: C3, C4, CC2

- General purpose: M3, M4, T2

- Accelerated computing: CG1, G2, P2

- Memory optimized: CR1, R3, R4, X1

- Storage optimized: D2, HI1, HS1, I2
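To put the two MTU values into perspective, here is a minimal sketch of how much TCP payload fits into a single packet at each size. It assumes plain IPv4 and TCP headers of 20 bytes each with no options, and the function name `tcp_payload` is made up for this illustration.

```shell
#!/bin/sh
# Rough per-packet TCP payload for a given MTU.
# Assumption: 20-byte IPv4 header + 20-byte TCP header, no options.
tcp_payload() {
    echo $(( $1 - 40 ))
}

for mtu in 1500 9001; do
    echo "MTU $mtu -> $(tcp_payload "$mtu") bytes of TCP payload per packet"
done

# To see what an interface currently uses (eth0 is an assumption):
#   ip link show eth0
```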

As always, Julia Evans has got you covered on the basics of networking and the MTU.

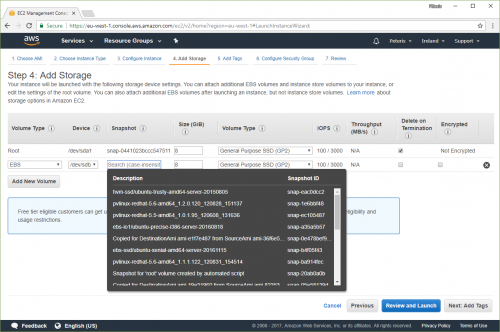

Using Ansible to bootstrap an Amazon EC2 instance

The article “Using Ansible to Bootstrap My Work Environment Part 4” is pure gold for anyone trying to figure out all the moving parts needed to automate the provisioning and configuration of an Amazon EC2 instance with Ansible.

Sure, some bits are easier than others, but it takes time to go from one step to another. In this article, you have everything you need, including the provisioning Ansible playbook and variables, cloud-init bits, and more.

I’ve printed and laminated my copy. It’s on the wall now. It will provide me with countless hours of joy during the upcoming Christmas season.

Setting up NAT on Amazon AWS

When it comes to Amazon AWS, there are a few options for configuring Network Address Translation (NAT). Here is a brief overview.

NAT Gateway

NAT Gateway is a configuration very similar to Internet Gateway. My understanding is that the only major difference between the NAT Gateway and the Internet Gateway is that you have control over the external public IP address of the NAT Gateway. That’ll be one of your allocated Elastic IPs (EIPs). This option is the simplest of the three that I considered. If you need plain and simple NAT, then that’s a good one to go for.

NAT Instance

NAT Instance is a special purpose EC2 instance, which is configured to do NAT out of the box. If you need anything on top of plain NAT (like load balancing, or detailed traffic monitoring, or firewalls), but don’t have enough confidence in your network and system administration skills, this is a good option to choose.

Custom Setup

If you are the Do It Yourself guy, this option is for you. But it can get tricky. Here are a few things that I went through, learnt and suffered through, so that you don’t have to (or future me, for that matter).

Let’s start from the beginning. You’ve created your own Virtual Private Cloud (VPC). In that cloud, you’ve created two subnets – Public and Private (I’ll use this for example, and will come back to what happens with more). Both of these subnets use the same routing table with the Internet Gateway. Now you’ve launched an EC2 instance into your Public subnet and assigned it a private IP address. This will be your NAT instance. You’ve also launched another instance into the Private subnet, which will be your test client. So far so good.

This instance will be used for translating internal IP addresses from the Private subnet to the external public IP address. So, we, obviously, need an external IP address. Let’s allocate an Elastic IP and associate it with the EC2 instance. Easy peasy.

Now, we’ll need to create another routing table, using our NAT instance as the default gateway. Once created, this routing table should be associated with our Private subnet. This will cause all the machines on that network to use the NAT instance for any external communications.
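The Elastic IP and routing steps above can also be done from the AWS CLI. What follows is a sketch rather than a tested recipe: all the IDs are placeholders, the function names and the `run` wrapper are mine, and the script only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Print commands by default; set DRY_RUN=0 to actually execute them.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Associate an already allocated Elastic IP with the NAT instance.
# (Allocation itself would be: aws ec2 allocate-address --domain vpc)
associate_eip() {   # args: instance-id allocation-id
    run aws ec2 associate-address --instance-id "$1" --allocation-id "$2"
}

# Send the Private subnet's default route through the NAT instance.
# The route table is assumed to exist already
# (aws ec2 create-route-table --vpc-id <vpc-id>).
# Note: --instance-id only works while the instance has exactly one network
# interface; with more than one, use --network-interface-id instead.
route_via_nat() {   # args: route-table-id nat-instance-id private-subnet-id
    run aws ec2 create-route --route-table-id "$1" \
        --destination-cidr-block 0.0.0.0/0 --instance-id "$2"
    run aws ec2 associate-route-table --route-table-id "$1" --subnet-id "$3"
}

# Placeholder IDs, purely for illustration.
associate_eip i-0123456789abcdef0 eipalloc-0123456789abcdef0
route_via_nat rtb-0123456789abcdef0 i-0123456789abcdef0 subnet-0123456789abcdef0
```

Printing first and executing second is deliberate: a wrong route table association is an easy way to cut yourself off from a subnet.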

Let’s do a quick side track here – security. There are three levels that you should keep in mind here:

- Network ACLs. These are Amazon AWS access control lists, which control the traffic allowed in and out of the networks (such as our Public and Private subnets). If the Network ACL prevents certain traffic, you won’t be able to reach the host, irrespective of the host security configuration. So, for the sake of the example, let’s allow all traffic in and out of both the Public and Private networks. You can adjust it once your NAT is working.

- Security Groups. These are Amazon AWS permissions which control what type of traffic is allowed in or out of the network interface. This is slightly confusing for hosts with a single interface, but super useful for machines with multiple network interfaces, especially if those interfaces are transferred between instances. Create a single Security Group (for now, you can adjust this later), which will allow any traffic in from your VPC range of IPs, and any outgoing traffic. Assign this Security Group to both EC2 instances.

- Host firewall. Chances are, you are using a modern Linux distribution for your NAT host. This means that there is probably an iptables service running with some default configuration, which might prevent certain access. I’m not going to suggest disabling it, especially on a machine facing the public Internet. But just keep it in mind, and at the very least allow the ICMP protocol, if not from everywhere, then at least from your VPC IP range.

Now, on to the actual NAT. It is technically possible to set up and use NAT on a machine with a single network interface, but you’d probably be frowned upon by other system and network administrators. Furthermore, it doesn’t seem to be possible on the Amazon AWS infrastructure. I’m not 100% sure about that, but I’ve spent more time than I had trying to figure this out and I failed miserably.

The rest of the steps would greatly benefit from a bunch of screenshots and step-by-step click through guides, which I am too lazy to do. You can use this manual, as a base, even though it covers a slightly different, more advanced setup. Also, you might want to have a look at CentOS 7 instructions for NAT configuration, and the discussion on the differences between SNAT and MASQUERADE.

We’ll need a second network interface. You can create a new Network Interface with an IP in your Private subnet and attach it to the NAT instance. Here comes a word of caution: there is a limit on how many network interfaces can be attached to an EC2 instance. This limit is based on the type of the instance. So, if you want to use a t2.nano or t2.micro instance, for example, you’d be limited to only two interfaces. That’s why I’ve used the example with two networks – to have a third interface added, you’d need a much bigger instance, like t2.medium. (Which is total overkill for my purposes.)

Now that you’ve attached the second interface to your EC2 instance, we have a few things to do. First, you need to disable “Source/Destination Check” on the second network interface. You can do it in your AWS Console, or maybe even through the API (I haven’t gone that deep yet).
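For the record, attaching the interface and switching off the “Source/Destination Check” can be sketched with the AWS CLI as well. The IDs below are placeholders, the function names and the `run` wrapper are my own, and nothing is executed unless `DRY_RUN=0` is set. The interface itself is assumed to exist already (something like `aws ec2 create-network-interface --subnet-id <private-subnet-id>`).

```shell
#!/bin/sh
# Print commands by default; set DRY_RUN=0 to actually execute them.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Attach an existing network interface as the instance's second device (eth1).
attach_second_interface() {   # args: eni-id instance-id
    run aws ec2 attach-network-interface --network-interface-id "$1" \
        --instance-id "$2" --device-index 1
}

# Turn off the Source/Destination Check on that interface.
disable_source_dest_check() {   # args: eni-id
    run aws ec2 modify-network-interface-attribute \
        --network-interface-id "$1" --no-source-dest-check
}

# Placeholder IDs, purely for illustration.
attach_second_interface eni-0123456789abcdef0 i-0123456789abcdef0
disable_source_dest_check eni-0123456789abcdef0
```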

It is time to adjust the configuration of our EC2 instance. I’ll assume the CentOS 7 Linux distribution, but it’d be very easy to adjust to whatever other Linux you are running.

Firstly, we need to configure the second network interface. The easiest way to do this is to copy the /etc/sysconfig/network-scripts/ifcfg-eth0 file to /etc/sysconfig/network-scripts/ifcfg-eth1, and then edit the eth1 file, changing the DEVICE variable to “eth1”. Before you restart your network service, also edit the /etc/sysconfig/network file and add the following: GATEWAYDEV=eth0. This will tell the operating system to use the first network interface (eth0) as the gateway device. Otherwise, it’ll be sending things into the Private network and things won’t work as you expect. Now, restart the network service and make sure that both network interfaces are there, with correct IPs, and that your routes are fine.
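The copy-and-edit routine above could look roughly like this as a script. It is a sketch under a few assumptions: `NETDIR` and `NETFILE` are variables I introduced so the functions can be tried against a scratch directory first, and dropping `HWADDR` and `UUID` lines is my own precaution, since those would describe eth0 rather than eth1.

```shell
#!/bin/sh
# Derive ifcfg-eth1 from ifcfg-eth0 and pin the default gateway to eth0.
# NETDIR and NETFILE default to the CentOS 7 paths from the text.
NETDIR="${NETDIR:-/etc/sysconfig/network-scripts}"
NETFILE="${NETFILE:-/etc/sysconfig/network}"

make_eth1_config() {
    # Assumption: HWADDR/UUID lines, if present, belong to eth0 and must not
    # be carried over to eth1.
    sed -e 's/^DEVICE=.*/DEVICE="eth1"/' -e '/^HWADDR=/d' -e '/^UUID=/d' \
        "$NETDIR/ifcfg-eth0" > "$NETDIR/ifcfg-eth1"
}

set_gateway_device() {
    # Append GATEWAYDEV=eth0 only if no GATEWAYDEV line exists yet.
    grep -q '^GATEWAYDEV=' "$NETFILE" 2>/dev/null || echo 'GATEWAYDEV=eth0' >> "$NETFILE"
}
```

On the real machine this would be run as root, along the lines of `make_eth1_config && set_gateway_device && systemctl restart network`.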

Secondly, we need to tweak the kernel for the NAT job (sounds funny, doesn’t it?). Edit your /etc/sysctl.conf file and make sure it has the following lines in it:

    # Enable IP forwarding
    net.ipv4.ip_forward=1
    # Disable ICMP redirects
    net.ipv4.conf.all.accept_redirects=0
    net.ipv4.conf.all.send_redirects=0
    net.ipv4.conf.eth0.accept_redirects=0
    net.ipv4.conf.eth0.send_redirects=0
    net.ipv4.conf.eth1.accept_redirects=0
    net.ipv4.conf.eth1.send_redirects=0

Apply the changes with sysctl -p.

Thirdly, and lastly, configure iptables to perform the network address translation. Edit /etc/sysconfig/iptables and make sure you have the following:

    *nat
    :PREROUTING ACCEPT [48509:2829006]
    :INPUT ACCEPT [33058:1879130]
    :OUTPUT ACCEPT [57243:3567265]
    :POSTROUTING ACCEPT [55162:3389500]
    -A POSTROUTING -s 10.0.0.0/16 -o eth0 -j MASQUERADE
    COMMIT

Adjust the IP range from 10.0.0.0/16 to your VPC range or the network that you want to NAT. Restart the iptables service and check that everything is hunky-dory:

- The NAT instance can ping a host on the Internet (like 8.8.8.8).

- The NAT instance can ping a host on the Private network.

- The host on the Private network can ping the NAT instance.

- The host on the Private network can ping a host on the Internet (like 8.8.8.8).
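The NAT instance side of this checklist is easy to script. Below is a minimal sketch: the `check` helper is my own, `PRIVATE_HOST` is a placeholder for the test client’s address, and nothing is pinged unless that variable is set. The extra IP forwarding check is not on the list above, but it is the first thing I’d look at when the last item fails.

```shell
#!/bin/sh
# Run a command quietly and report whether it succeeded.
check() {   # args: description command...
    desc="$1"; shift
    if "$@" >/dev/null 2>&1; then
        echo "OK    $desc"
    else
        echo "FAIL  $desc"
    fi
}

# Checks to run on the NAT instance. PRIVATE_HOST is a placeholder for the
# test client's address; nothing runs unless it is set.
if [ -n "${PRIVATE_HOST:-}" ]; then
    check "IP forwarding enabled"  grep -qx 1 /proc/sys/net/ipv4/ip_forward
    check "Internet reachable"     ping -c 3 -W 2 8.8.8.8
    check "Private host reachable" ping -c 3 -W 2 "$PRIVATE_HOST"
fi
```

The remaining two checks have to be run from the host on the Private network: ping the NAT instance’s private address, then ping 8.8.8.8.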

If all that works fine, don’t forget to adjust your Network ACLs, Security Groups, and iptables to whatever level of paranoia is appropriate for your environment. If something is still not working, check all of the above again, especially the security layers, IP addresses (I spent a couple of hours hunting for a problem that turned out to be an IP address typo, 10.0.0/16 instead of 10.0.0.0/16, which is not the most obvious of things), network masks, etc.
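A typo like that one is cheap to catch before it goes into a config file. Here is a small sketch of a sanity check; the function name is hypothetical, and it only validates the shape of the string (four dotted numbers and a prefix length of 0 to 32), not whether each octet is 255 or less.

```shell
#!/bin/sh
# Succeeds only if the argument looks like a.b.c.d/n with n between 0 and 32.
# This checks the shape only, not that each octet is 255 or less.
looks_like_cidr() {
    printf '%s\n' "$1" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}/([0-9]|[12][0-9]|3[0-2])$'
}

for range in 10.0.0.0/16 10.0.0/16; do
    if looks_like_cidr "$range"; then
        echo "$range looks fine"
    else
        echo "$range is malformed"
    fi
done
```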

Hope this helps.

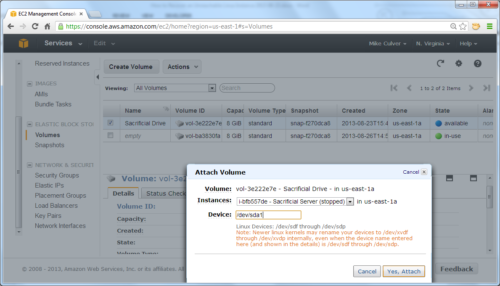

How to Recover an Unreachable EC2 Linux Instance

Here is a tutorial that will come in handy one day, in a moment of panic: “How to Recover an Unreachable Linux Instance”. It has plenty of screenshots and shows each step in detail.

TL;DR version:

- Start a new instance (or pick one from the existing ones).

- Stop the broken instance.

- Detach the volume from the broken instance.

- Attach the volume to the new/existing instance as an additional disk.

- Troubleshoot and fix the problem.

- Detach the volume from the new/existing instance.

- Attach the volume to the broken instance.

- Start the previously broken instance.

- Get rid of the useless new instance, if you didn’t reuse the existing one for the troubleshooting and fixing process.

- ???

- PROFIT!
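The TL;DR steps above map onto AWS CLI calls roughly as follows. Treat this as a sketch, not as what the tutorial itself does: the IDs are placeholders, the function names and the `run` wrapper are mine, the device names `/dev/sdf` and `/dev/xvda` are assumptions that depend on the AMI, and the script only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Print commands by default; set DRY_RUN=0 to actually execute them.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# Stop the broken instance and move its volume to the rescue instance.
move_volume() {   # args: broken-instance-id volume-id rescue-instance-id
    run aws ec2 stop-instances --instance-ids "$1"
    run aws ec2 wait instance-stopped --instance-ids "$1"
    run aws ec2 detach-volume --volume-id "$2"
    run aws ec2 wait volume-available --volume-ids "$2"
    # /dev/sdf as the secondary device name is an assumption.
    run aws ec2 attach-volume --volume-id "$2" --instance-id "$3" --device /dev/sdf
}

# After the fix: give the volume back and start the repaired instance.
return_volume() {   # args: broken-instance-id volume-id root-device-name
    run aws ec2 detach-volume --volume-id "$2"
    run aws ec2 wait volume-available --volume-ids "$2"
    run aws ec2 attach-volume --volume-id "$2" --instance-id "$1" --device "$3"
    run aws ec2 start-instances --instance-ids "$1"
}

# Placeholder IDs. With DRY_RUN=0, call the two functions separately,
# with the actual repair (mount, fix, unmount) done in between.
move_volume i-0aaaaaaaaaaaaaaaa vol-0123456789abcdef0 i-0bbbbbbbbbbbbbbbb
return_volume i-0aaaaaaaaaaaaaaaa vol-0123456789abcdef0 /dev/xvda
```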