AWSume is a command-line tool that makes switching between multiple AWS profiles easy.

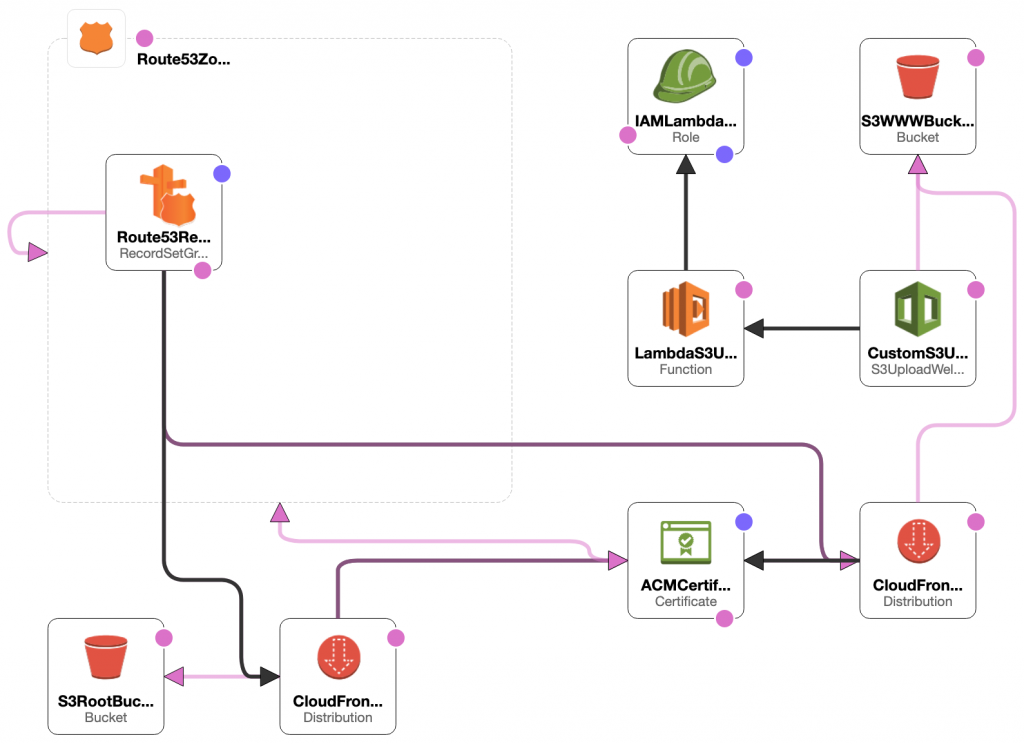

SCAR is a deployment stack for static websites. It’s not exactly a single-click process, but it is as simple as possible. The name is an acronym of the AWS services used for the deployment: S3, CloudFront, AWS Certificate Manager (ACM), and Route 53.

The whole stack is built with AWS CloudFormation, and shouldn’t require much tinkering with the services or reading lengthy documentation pages. This bit should also motivate you to try it out:

How much will this cost?

For most sites, it will likely cost less than $1 per month. The cost for a Route 53 hosted zone is fixed at $0.50/month; the remaining CloudFront and S3 costs depend on the levels of traffic, but typically amount to a few cents for small levels of traffic.
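To make the arithmetic in that estimate concrete, here is a minimal sketch. Only the $0.50 hosted zone fee comes from the quote above; the function name and the CloudFront and S3 figures passed in are made-up inputs for illustration, not actual AWS prices.

```python
# Fixed Route 53 hosted zone fee from the quoted estimate, in cents.
HOSTED_ZONE_CENTS = 50


def estimate_monthly_cents(cloudfront_cents: int, s3_cents: int) -> int:
    """Add traffic-dependent costs to the fixed hosted zone fee.

    Amounts are whole cents to avoid floating-point rounding.
    """
    return HOSTED_ZONE_CENTS + cloudfront_cents + s3_cents


# Hypothetical small site: 3 cents of CloudFront and 2 cents of S3 usage.
total = estimate_monthly_cents(cloudfront_cents=3, s3_cents=2)
print(f"${total / 100:.2f} per month")
```

With usage costs of only a few cents, the fixed hosted zone fee dominates the bill, which is why the total stays under $1.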

faast.js is a new framework that makes writing serverless functions super easy. Read more about it in this introductory blog post:

Faast.js started as a side project to solve the problem of large scale software testing. Serverless functions seemed like a good fit because they could scale up to perform work in parallel, then scale down to eliminate costs when not being used. Even better, all infrastructure would be managed by the cloud provider. It seemed like a dream come true: a giant computer that could be as big as needed for the job at hand, yet could be rented in 100ms increments.

But trying to build this on AWS Lambda was challenging:

* Complex setup. Lambda throws you into the deep end with IAM roles, permissions, command line tools, web consoles, and special calling conventions. Lambda and other FaaS are oriented towards an event-based processing model, and not optimized for batch processing.

* Primitive package dependency support. Everything has to be packaged up manually in a zip file. Every change to the code or tests requires a manual re-deploy.

* Native packages. Common testing tools like puppeteer are supported only if they are compiled specially for Lambda.

* Persistent infrastructure. Logs, queues, and functions are left behind in the cloud after a job is complete. These incur costs and count towards service limits, so they need to be managed or removed, creating an unnecessary ops burden.

* Developer productivity. Debugging, high quality editor support, and other basic productivity tools are awkward or missing from serverless function development tooling.

Faast.js was born to solve these and many other practical problems, to make serverless batch processing as simple as possible.

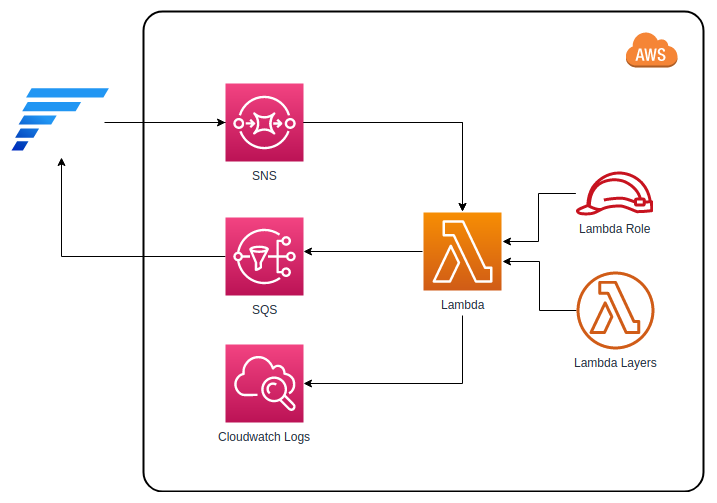

And here’s a quick visualization of the architecture:

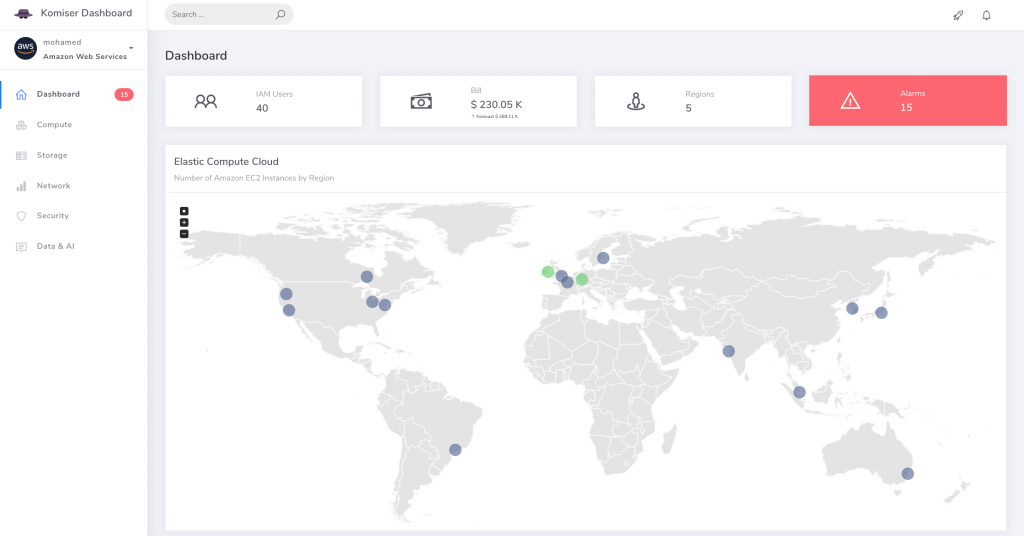

Komiser is a really nice tool that provides an overview of your AWS setup. After a simple install, you’ll have a web console that visualizes your AWS regions and the resources you run in them. It’s great for getting a quick overview, as well as for analyzing billing, security, and utilization issues.

aws-securitygroup-grapher is a handy tool that can generate a variety of graphs visualizing AWS security groups. It is implemented as an Ansible role and uses GraphViz to produce the results.

This is particularly useful when you need to get familiar with a complex VPC setup built by someone else, or when you want to review the results of an automated setup.
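To give a feel for the general idea of turning security group rules into a graph, here is a minimal sketch that emits GraphViz DOT text. It is not how aws-securitygroup-grapher itself works: the `Rule` type, the `to_dot` function, and the group names are all hypothetical, and the rules are hard-coded rather than fetched from AWS.

```python
from typing import Iterable, NamedTuple


class Rule(NamedTuple):
    """One inbound rule (hypothetical, simplified shape)."""
    source: str  # security group ID or CIDR block allowed in
    target: str  # security group that receives the traffic
    port: int    # TCP port the rule opens


def to_dot(rules: Iterable[Rule]) -> str:
    """Render rules as a directed graph in GraphViz DOT syntax."""
    lines = ["digraph security_groups {"]
    for rule in rules:
        # One edge per rule, pointing from the allowed source to the target.
        lines.append(
            f'  "{rule.source}" -> "{rule.target}" [label="tcp/{rule.port}"];'
        )
    lines.append("}")
    return "\n".join(lines)


# Made-up example: the internet reaches the web tier, which reaches the database.
rules = [
    Rule("0.0.0.0/0", "sg-web", 443),
    Rule("sg-web", "sg-db", 5432),
]
dot = to_dot(rules)
print(dot)
```

Saving the printed text to a file and running it through GraphViz's `dot` command (for example `dot -Tpng`) would produce an image of the graph.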