I know, I know, this blog is turning into an Amazon marketing bullhorn, but what can I do? The Amazon re:Invent 2016 conference turned into an exciting stream of news for the regular Joe, like yours truly.

This time, Amazon announced Rekognition, an image detection and recognition service powered by deep learning. This is yet another area that has traditionally been difficult for computers.

As with the other AWS services, I was eager to try it out. So I grabbed a few images from my Instagram stream and uploaded them into the Rekognition Console. I don’t think Rekognition actually uses Instagram to learn about the tags and such (but it is possible). Just to make it a bit more difficult for them, I gave the images generic names like q1.jpg, q2.jpg, etc.

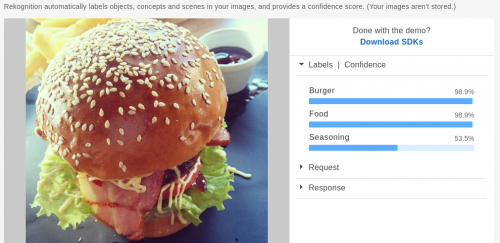

Here are the results. Firstly, the burger.

This was spot on, with burger, food, and seasoning identified as labels. The confidence for burger and food was almost 99%, which is correct.

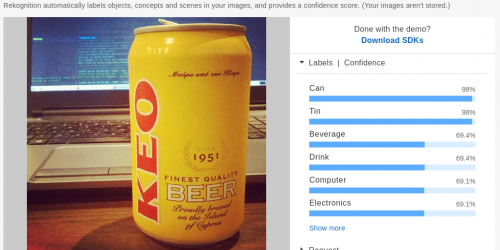

Then, the beer can with a laptop in the background.

Can and tin labels are at 98% confidence. Beverage, drink, computer and electronics are at 69%, which is not bad at all.

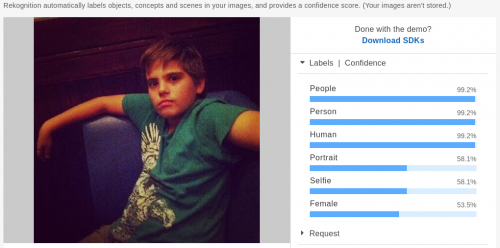

Then I decided to try something with people. Here goes my son Maxim, in a very grainy, low-light picture.

People, person, human at 99%, which is correct. Portrait and selfie at 58%, which is accurate enough. And then female at 53%, which is not exactly the case. But since he is still a kid, that’s not too terrible.

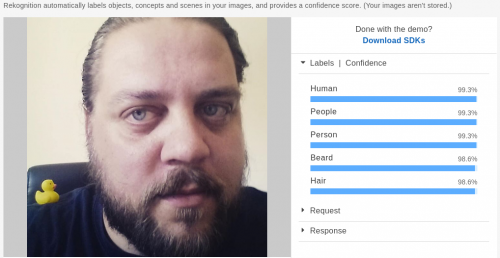

Let’s see what it thinks of me then.

Human, people, person at 99% – yup. 98% for beard and hair is not bad. But it completely missed the duck! :) I guess it returns a limited number of labels, and while the duck is pretty obvious, it is tiny compared to how much of the picture is occupied by my ugly mug.
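For the curious, the same experiment can be run outside the console. Here is a minimal sketch, assuming the boto3 SDK with AWS credentials and a region already configured. The `detect_labels` call takes `MaxLabels` and `MinConfidence` parameters, which would be one way to check my guess about the label limit. The helper names and the sample response below are made up for illustration; the numbers are not real Rekognition output for my photo.

```python
def fetch_labels(path, max_labels=50, min_confidence=50.0):
    """Send a local image to Rekognition and return the raw response.

    Assumes boto3 is installed and AWS credentials/region are configured.
    """
    import boto3  # imported here so the rest of the sketch runs without it

    client = boto3.client("rekognition")
    with open(path, "rb") as image_file:
        return client.detect_labels(
            Image={"Bytes": image_file.read()},
            MaxLabels=max_labels,
            MinConfidence=min_confidence,
        )


def summarize_labels(response, min_confidence=0.0):
    """Return (name, confidence) pairs, highest confidence first."""
    labels = [
        (label["Name"], round(label["Confidence"], 1))
        for label in response.get("Labels", [])
        if label["Confidence"] >= min_confidence
    ]
    return sorted(labels, key=lambda pair: pair[1], reverse=True)


# Hand-written response in the shape detect_labels returns.
# The values are hypothetical, not actual results.
sample_response = {
    "Labels": [
        {"Name": "Beard", "Confidence": 98.2},
        {"Name": "Person", "Confidence": 99.1},
        {"Name": "Duck", "Confidence": 41.0},
    ]
}

print(summarize_labels(sample_response, min_confidence=50.0))
```

With a real image, `summarize_labels(fetch_labels("q4.jpg", min_confidence=10.0))` would show whether lowering the threshold brings up labels that were otherwise cut off.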

Overall, these are quite good results. This blog post covers a few other cases, like figuring out the breed of a dog and the emotional state of people in the picture, which is even cooler than my tests.

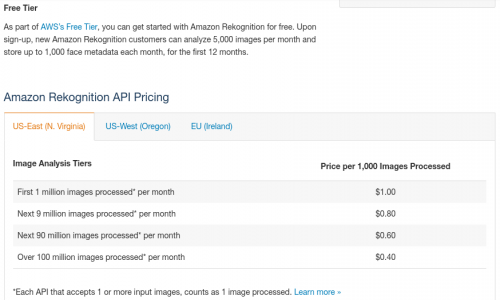

Pricing-wise, I think the service is quite affordable as well:

$1 USD per 1,000 images is very reasonable. The traditional Free Tier allows for 5,000 images per month. And API calls that accept more than one image per call are still counted as a single image.
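To put those numbers in perspective, here is a back-of-the-envelope sketch. It assumes a flat $1 per 1,000 images billed pro rata per image, with the 5,000 free images applied every month; any volume discounts or Free Tier time limits are ignored, and the function name is my own.

```python
PRICE_PER_1000_IMAGES_USD = 1.00   # from the pricing above
FREE_TIER_IMAGES_PER_MONTH = 5000  # from the pricing above


def monthly_cost_usd(images_processed, free_tier=True):
    """Estimate the monthly bill, assuming a flat per-image rate."""
    free = FREE_TIER_IMAGES_PER_MONTH if free_tier else 0
    billable = max(0, images_processed - free)
    return billable * PRICE_PER_1000_IMAGES_USD / 1000


print(monthly_cost_usd(3000))   # within the Free Tier: 0.0
print(monthly_cost_usd(25000))  # 20,000 billable images: 20.0
```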

All I need now is a project where I can apply this awesomeness…