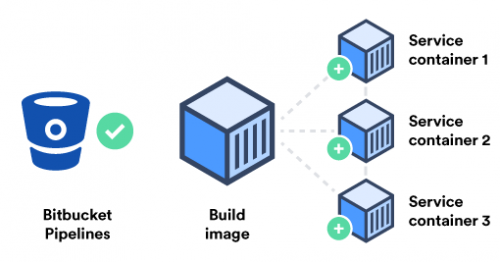

Here are some exciting news from the BitBucket Pipelines blog: Bitbucket Pipelines now supports building Docker images, and service containers for database testing.

We developed Pipelines to enable teams to test and deploy software faster, using Docker containers to manage their build environment. Now we’re adding advanced Docker support – building Docker images, and Service containers for database testing.