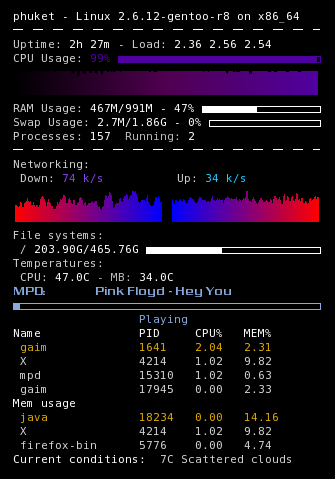

After our recent MySQL migrations, I started running into a strange issue: the Zabbix server process was crashing periodically, several times a day.

    8395:20161109:175408.023 [Z3005] query failed: [2013] Lost connection to MySQL server during query [begin;]
    8395:20161109:175408.024 [Z3001] connection to database 'zabbix_database_name_here' failed: [2003] Can't connect to MySQL server on 'zabbix_database_host_here' (111)
    8395:20161109:175408.024 Got signal [signal:11(SIGSEGV),reason:1,refaddr:(nil)]. Crashing ...
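Before reaching for a workaround, it can help to put a number on "several times a day". The Python sketch below counts the SIGSEGV crash lines per day. It assumes the `PID:YYYYMMDD:HHMMSS.mmm message` layout visible in the log lines above, and the function name `count_crashes_per_day` is purely illustrative.

```python
import re
from collections import Counter
from typing import Iterable

# Zabbix server log lines look like "PID:YYYYMMDD:HHMMSS.mmm message";
# the PID may be left-padded with spaces, hence the leading \s*.
LINE_RE = re.compile(r"^\s*(\d+):(\d{8}):(\d{6}\.\d{3}) (.*)$")


def count_crashes_per_day(lines: Iterable[str]) -> Counter:
    """Count 'Got signal ... Crashing' lines, keyed by their YYYYMMDD date."""
    crashes: Counter = Counter()
    for line in lines:
        match = LINE_RE.match(line)
        if match is None:
            continue  # not a timestamped Zabbix log line
        _pid, date, _time, message = match.groups()
        if message.startswith("Got signal") and "Crashing" in message:
            crashes[date] += 1
    return crashes
```

Because it accepts any iterable of lines, an open log file can be passed in directly. The log location depends on the `LogFile` setting in your server config; /var/log/zabbix/zabbix_server.log is a common packaged default, but treat that path as an assumption.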

After digging around for a bit, I found that this seems to be a widely reported issue, related to the Zabbix server using the same database connection that one of its agents is monitoring (here is an example bug report).

Not having enough time to troubleshoot and fix it properly, I decided, for the time being, to use another monitoring tool, monit, to keep an eye on the Zabbix server process and restart it if it goes down. After “yum install monit”, I dropped the following into /etc/monit.d/zabbix:

    check process zabbix_server with pidfile /var/run/zabbix/zabbix_server.pid
      start program = "/sbin/service zabbix-server start" with timeout 60 seconds
      stop program = "/sbin/service zabbix-server stop"
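To make the mechanism concrete, here is a rough Python approximation of what one monit cycle does for this check: read the PID from the pidfile, test whether that process is alive, and run the start program if it is not. This is an illustrative sketch, not how monit is actually implemented. `is_running` and `check_once` are hypothetical names, and the subprocess timeout only loosely mirrors the `with timeout 60 seconds` clause.

```python
import os
import subprocess
from typing import Sequence


def is_running(pidfile: str) -> bool:
    """Return True if the PID stored in pidfile belongs to a live process."""
    try:
        with open(pidfile, encoding="ascii") as f:
            pid = int(f.read().strip())
    except (OSError, ValueError):
        return False  # missing, unreadable or malformed pidfile
    if pid <= 0:
        return False  # kill() with 0 or a negative PID targets process groups
    try:
        os.kill(pid, 0)  # signal 0: existence check only, nothing is delivered
    except ProcessLookupError:
        return False  # no such process: the server is down
    except PermissionError:
        return True  # process exists but is owned by another user
    return True


def check_once(pidfile: str, start_cmd: Sequence[str], timeout: float = 60) -> bool:
    """One monitoring cycle: run start_cmd if the process is down.

    Returns True if a restart was attempted. Raises
    subprocess.TimeoutExpired if start_cmd runs longer than timeout.
    """
    if is_running(pidfile):
        return False
    subprocess.run(list(start_cmd), timeout=timeout, check=False)
    return True
```

Calling `check_once("/var/run/zabbix/zabbix_server.pid", ["/sbin/service", "zabbix-server", "start"])` would do roughly what the config above asks monit to do on each polling cycle. Monit adds the scheduling, logging and stop handling on top.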

Start the monit service, make sure it also starts at boot, and watch it in action in /var/log/monit:

    [UTC Nov 20 20:49:18] error : 'zabbix_server' process is not running
    [UTC Nov 20 20:49:18] info : 'zabbix_server' trying to restart
    [UTC Nov 20 20:49:18] info : 'zabbix_server' start: /sbin/service
    [UTC Nov 20 20:50:19] info : 'zabbix_server' process is running with pid 28941

The chances of both systems failing at once are slim, so I think this will buy me some time.