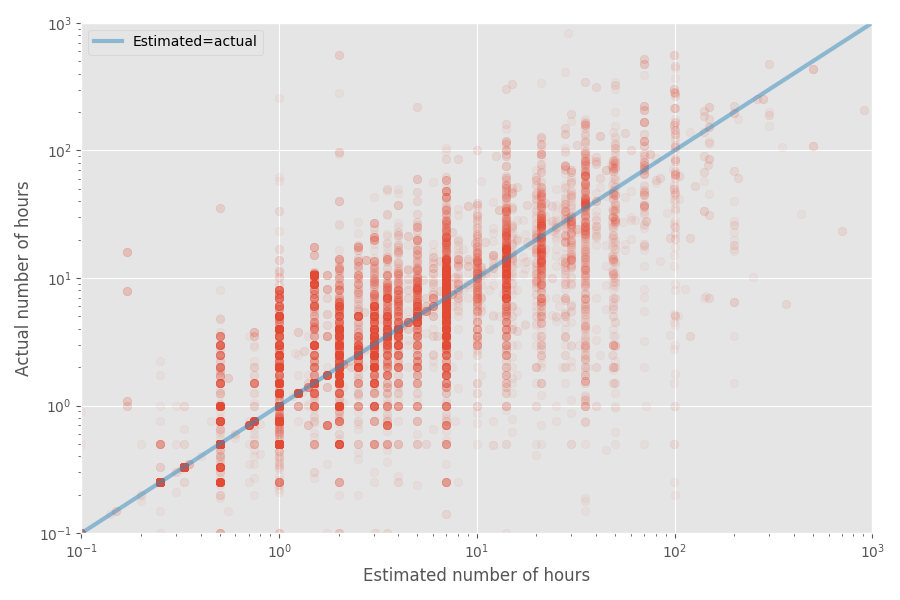

“Why software projects take longer than you think – a statistical model” is an interesting take on the problem of bad estimations in software projects. I’m not that great with math, but even then the article is very interesting. And there is a lot that I agree with.

Here’s a quote for those of you who couldn’t make it through:

Why software tasks always take longer than you think

Assuming this dataset is representative of software development (questionable!), we can infer some more numbers. We have the parameters for the t-distribution, so we can compute the mean time it takes to complete a task, without knowing the σ for that task is.

While the median blowup factor imputed from this fit is 1x (as before), the 99% percentile blowup factor is 32x, but if you go to 99.99% percentile, it’s a whopping 55 million! One (hand wavy) interpretation is that some tasks end up being essentially impossible to do. In fact, these extreme edge cases have such an outsize impact on the mean, that the mean blowup factor of any task ends up being infinite. This is pretty bad news for people trying to hit deadlines!