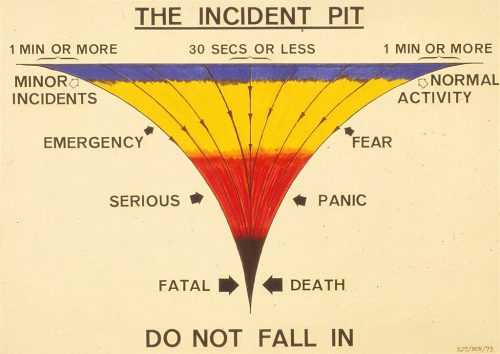

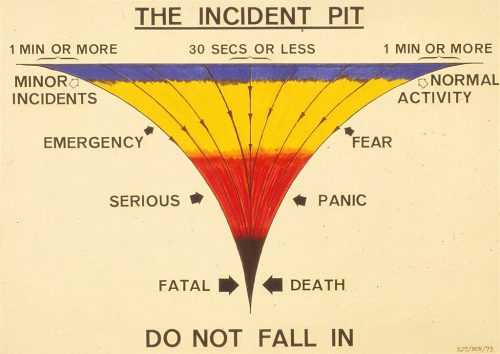

I came across the Wikipedia page for the incident pit, a concept derived from analyzing many diving incident reports:

The diagram shown is something that has evolved from studying many incident reports. It is important to realize that the shape of the “Pit” is in no way connected with the depth of water and that all stages can occur in very shallow water or even on the surface.

The basic concept is that as an incident develops it becomes progressively harder to extract yourself or your companion from a worsening situation. In other words the farther you become “dragged” into the pit the steeper the sides become and a return to the “normal” situation is correspondingly more difficult.

Underwater swimming may be considered to be an activity where, due to the environment and equipment plus human nature, there is a continuing process of minor incidents – illustrated by the top area of the pit.

When one of these minor incidents becomes difficult to cope with, or is further complicated by other problems usually arriving all at the same time, the situation tends to become an emergency and the first feelings of fear begin to appear – illustrated by the next layer of the pit. If the emergency is not controlled at this early stage then panic, the diver’s worst enemy, leads to almost total lack of control and the emergency becomes a serious problem – illustrated by the third layer of the pit. Progression through to the final stage of the pit from the panic situation is usually very rapid and extremely difficult to reverse and a fatality may be inevitable – illustrated by the final black stage of the pit.

The time for an incident to evolve in this way can be as short as 30 seconds or less, illustrated by the straight line passing directly through all the stages in the centre of the pit, or it may be more a slower process building up over a period of one minute or more [maybe a week!] – illustrated by the curving lines running from the [top] extremities of the pit. In this later case it represents the slowly evolving incident when the diver or group may not be aware that a serious situation is in fact developing. Between 30 seconds and about 1 minute is representative of the time required to take the necessary decisions and actions when it becomes obvious that an incident is about to happen.

The final conclusion is simple: never allow incidents to develop beyond the top normal layer of activity. If you find yourself being drawn into the second stage – the emergency – then use all of your training skill and experience to extract yourself and your companions from the pit before the sides become too steep!

I don’t think this applies only to diving. Similar incident pits exist in other areas of human activity that involve crisis management (technology, business, politics, and healthcare come to mind). The circumstances and time frames may differ, but the overall pattern, I think, is much the same.