Great things are easy to get used to. When something works the way you want it to, and does so for a long time, it is inevitable that one day you’ll stop thinking about it altogether and accept it as a given.

Apache was a great web server, until Nginx came along. All of a sudden, it became obvious how much faster things could be, and how much simpler a configuration file could be.

For the last few years, Nginx has worked great for me. And now that I have come across Caddy, I realize that life can be a lot simpler.

Here’s a bit to get you started:

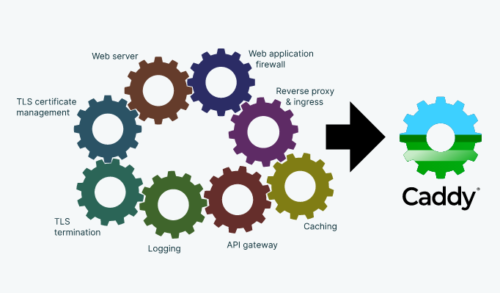

Caddy simplifies your infrastructure. It takes care of TLS certificate renewals, OCSP stapling, static file serving, reverse proxying, Kubernetes ingress, and more.

Its modular architecture means you can do more with a single, static binary that compiles for any platform.

Caddy runs great in containers because it has no dependencies—not even libc. Run Caddy practically anywhere.

Seriously? We now have a web server that handles HTTPS with automatically renewed certificates (yes, Let’s Encrypt) out of the box… Mind-blowing. I guess the 21st century is indeed here.

What else is there? Well, let’s see:

- Configurable via a RESTful JSON API. OMG! No more trickery with include files, syntax checking and restarts. In fact, configuration files are completely optional, and even if you choose to use them, they just use the same API under the hood. Bonus point: you can export the full configuration of a running server into a file via a simple API call.

- Extensible. Yes, that’s right! “Caddy can embed any Go application as a plugin, and has first-class support for plugins of plugins.” Check out their forum for plugin-related discussions, or simply search GitHub for Caddy.

- Supports HTTP/1.1, HTTP/2, and even HTTP/3 (IETF-standard-draft version of QUIC), WebSocket, and FastCGI.

- … and a lot more.
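To make the JSON API point from the list above a bit more concrete, here is a minimal Python sketch of pushing a configuration to a running Caddy and reading it back. It assumes Caddy's admin endpoint is listening on its default address, `localhost:2019`. The helper names, the server name `example`, and the host and upstream values are made up for illustration; treat this as a rough outline rather than a recommended setup.

```python
import json
import urllib.request

# Assumption: Caddy's admin endpoint is on its default address.
ADMIN_URL = "http://localhost:2019"


def build_reverse_proxy_config(host: str, upstream: str) -> dict:
    """Build a minimal Caddy JSON config that proxies `host` to `upstream`.

    The server name "example" is an arbitrary label chosen for this sketch.
    """
    return {
        "apps": {
            "http": {
                "servers": {
                    "example": {
                        "listen": [":443"],
                        "routes": [
                            {
                                "match": [{"host": [host]}],
                                "handle": [
                                    {
                                        "handler": "reverse_proxy",
                                        "upstreams": [{"dial": upstream}],
                                    }
                                ],
                            }
                        ],
                    }
                }
            }
        }
    }


def load_config(config: dict, admin_url: str = ADMIN_URL) -> int:
    """POST the whole config to /load, replacing the active one.

    Returns the HTTP status code of the response.
    """
    request = urllib.request.Request(
        f"{admin_url}/load",
        data=json.dumps(config).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return response.status


def export_config(admin_url: str = ADMIN_URL):
    """GET the full active config from /config/ and return the parsed JSON."""
    with urllib.request.urlopen(f"{admin_url}/config/") as response:
        return json.load(response)
```

With Caddy running locally, calling `load_config(build_reverse_proxy_config("example.com", "localhost:8080"))` should apply the configuration without a restart, and `export_config()` should return whatever the server is currently using, ready to be dumped into a file.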

Wow! It’s definitely worth checking out. While Nginx is not going to disappear (just as Apache is still around), Caddy might be a better option for your next project.