I have a friend who is a newcomer to the world of WordPress. Until recently, he was mostly working with custom-built systems and a PostgreSQL database engine, so there are many topics to cover.

One of the topics that came up today was the performance of the database engine. A quick Google search brought up the Benchmark plugin, which we used to compare results from several servers. (NOTE: you’ll need php-bcmath installed on your server for this plugin to work.)

My friend’s test server showed a rather poor 48 requests / second result. And that’s on an Intel Core2 Duo E4500 machine with 4 GB of RAM and 160 GB 7200 RPM SATA HDD, running Ubuntu 12.04 x86-64.

So, I tried it on my setup. My setup is all on Amazon EC2, using the smallest possible t2.micro servers (that’s Intel(R) Xeon(R) CPU E5-2670 v2 @ 2.50GHz, with 1 GB of RAM and god knows what kind of hard disk, running Amazon AMI).

First, I ran the benchmark on the test server, which hosts about 20 sites with low traffic (I didn’t want to bring up a separate instance for just a single benchmark run). MySQL runs on the same instance as the web server. And here are the results:

|

Your System |

Industry Average |

| CPU Speed: |

38,825 BogoWips |

24,896 BogoWips |

| Network Transfer Speed: |

97.81 Mbps |

11.11 Mbps |

| Database Queries per Second: |

425 Queries/Sec |

1,279 Queries/Sec |

Secondly, I ran the benchmark on one of the live servers, which also hosts about 20 sites with low traffic. Here though, Nginx web server runs on one instance and the MySQL database on another. Here are the results:

|

Your System |

Industry Average |

| CPU Speed: |

37,712 BogoWips |

24,901 BogoWips |

| Network Transfer Speed: |

133.91 Mbps |

11.15 Mbps |

| Database Queries per Second: |

1,338 Queries/Sec |

1,279 Queries/Sec |

In both cases, MySQL is v5.5.42, running on the /usr/share/doc/mysql55-server-5.5.42/my-huge.cnf configuration file. (I find it ironically pleasing that the tiniest of Amazon EC2 servers fits perfectly for the huge configuration shipped with documentation.)

The benchmark plugin explains how the numbers are calculated. Here’s what it says about the database queries:

To benchmark your database I use your wp_options table which uses the longtext column type which is the same type used by wp_posts. I do 1000 inserts of 50 paragraphs of text, then 1000 selects, 1000 updates and 1000 deletes. I use the time taken to calculate queries per second based on 4000 queries. This is a good indication of how fast your overall DB performance is in a worst case scenario when nothing is cached.

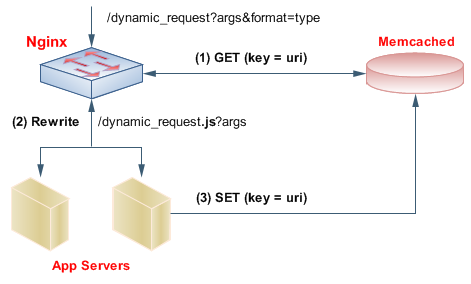

So, it’s a good number to throw around, but it’s far from the realistic site performance, as your WordPress site will mostly get SELECTs, not INSERTs or UPDATEs or DELETEs. And then, you’ll obviously need to see how many SQL queries do you need per page. And then you’ll need to examine all the caching in play – from browser, web server, WordPress, MySQL, and the operating system. And then, and then, and then.

But for a quick measure, I think, this is a good benchmark. It’s obvious that my friend can get a lot more out of his server without digging too deep. It’s obvious that separating web and database server into two Amazon instances gives you quite a boost. And it’s obvious that I don’t know much about performance measuring.