Here goes the story of me learning a few new swear words and pulling out nearly all my hair. Grab a cup of coffee, this will take a while to tell…

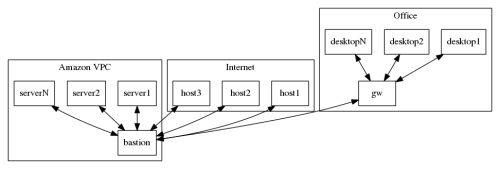

First of all, here is a diagram to make things a little bit more visual.

As you can see, we have an office network with NAT on the gateway. We have an Amazon VPC with NAT on the bastion host. And then there’s the rest of the Internet.

The setup is pretty straightforward. There are no outgoing firewalls anywhere, no VLANs, no network equipment – all of the involved machines are a variety of Linux boxes. The whole thing has been working fine for a while now.

A couple of weeks ago we had an issue with our ISP in the office. The Internet connection was alive, but we were getting extremely high packet loss – around 80%. The technician came by, changed the cables, and rebooted the ADSL modem, and we also rebooted the gateway. The problem was fixed, except for one annoying bit. We could access all of the Internet just fine, except for our Amazon VPC bastion host. Here’s where it gets interesting.
