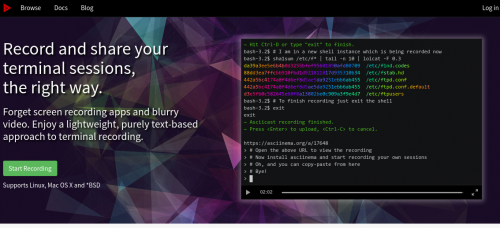

The other day I was puzzled by the results of a cron job script. The bash script in question was written in a hurry a while back, and I had assumed that if any of its steps failed, the whole script would fail. I was wrong. Some commands were failing, but the script kept running. This was especially hard to notice because of a number of unset variables, piped commands, and redirected error output.

Once I realized the problem, I was even more puzzled as to what the best solution was. Sure, you can check the exit code after each command in the script, but that didn’t seem elegant or efficient.
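For comparison, here is a rough sketch of what checking every step by hand might look like. The helper name check_step is made up for illustration, and the two commands are just stand-ins for real work:

```bash
#!/bin/bash

# check_step is a hypothetical helper: it runs the given command,
# reports a failure on STDERR, and passes the exit code back to the caller.
check_step() {
    "$@"
    local status=$?
    if [ "$status" -ne 0 ]; then
        echo "Command failed (exit $status): $*" >&2
    fi
    return "$status"
}

# Every single step needs its own explicit guard.
check_step true || exit 1
check_step ls / > /dev/null || exit 1
```

It works, but the guard has to be repeated on every line, and forgetting it once brings back the original problem.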

A couple of quick Google searches brought me to this StackOverflow thread (no surprise there), which opened my eyes to a few bash options that can be set at the beginning of a script to stop execution when an error or warning occurs (similar to use strict; use warnings; in Perl). Here’s a test script with some test commands, pipes, error redirects, and the options to control all that.

#!/bin/bash

# Stop on error

set -e

# Stop on uninitialized variables

set -u

# Stop on failed pipes

set -o pipefail

# Good command

echo "We start here ..."

# Use of non-initialized variable

echo "$FOOBAR"

echo "Still going after uninitialized variable ..."

# Bad command with no STDERR

cd /foobar 2> /dev/null

echo "Still going after a bad command ..."

# Good command into a bad pipe with no STDERR

echo "Good" | /some/bad/script 2> /dev/null

echo "Still going after a bad pipe ..."

# Benchmark

echo "We should never get here!"

Save it to test.sh, make it executable (chmod +x test.sh), and run it like so:

$ ./test.sh || echo Something went wrong

Then try commenting out some of the options and commands to see what happens in different scenarios.
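If you would rather see each option in isolation, something like the following sketch may help. It runs small snippets in a child bash, once without and once with the option, and records the exit status. The helper try_snippet and the variable names are my own invention for this demo, not part of the script above:

```bash
#!/bin/bash

# try_snippet is a hypothetical helper: it runs a snippet in a child bash
# and prints its exit status, so a failure inside the snippet cannot stop
# this demo script itself.
try_snippet() {
    local status=0
    bash -c "$1" > /dev/null 2>&1 || status=$?
    echo "$status"
}

# set -e: the failing "false" stops the child shell before the final "true".
errexit_off=$(try_snippet 'false; true')
errexit_on=$(try_snippet 'set -e; false; true')

# set -u: expanding an unset variable becomes a fatal error.
nounset_off=$(try_snippet 'unset FOOBAR; echo "$FOOBAR"')
nounset_on=$(try_snippet 'set -u; unset FOOBAR; echo "$FOOBAR"')

# set -o pipefail: a failure anywhere in the pipeline fails the whole pipeline.
pipefail_off=$(try_snippet 'false | true')
pipefail_on=$(try_snippet 'set -o pipefail; false | true')

echo "errexit:  off=$errexit_off on=$errexit_on"
echo "nounset:  off=$nounset_off on=$nounset_on"
echo "pipefail: off=$pipefail_off on=$pipefail_on"
```

In each pair the "off" status should be 0 and the "on" status non-zero, which is exactly the difference between a script that silently carries on and one that stops.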

I think, from now on, those three options will be the standard way I start all of my bash scripts.