Location: Buffalo Wings & Rings – Cyprus

Month: January 2018

17 Tips for Using Composer Efficiently

Martin Hujer has collected 17 tips for using composer efficiently, and then added a few more after receiving feedback on the blog post. I was familiar with most of these, but a few were new to me.

Tip #7: Run Travis CI builds with different versions of dependencies

I knew about the Travis CI matrix configuration, but had only used it for other things. I’ll be looking into extending it to our composer tests shortly.

Tip #8: Sort packages in require and require-dev by name

This is a great tip! I read the composer documentation several times, but somehow I missed the sort-packages option. It is especially useful for the way we manage projects at work (waterfall merges from templates and basic projects into more complex ones).

Tip #9: Do not attempt to merge composer.lock when rebasing or merging

Here, I’m not quite sure about the whole bit on git attributes. Having git try to merge and generate a conflict creates a very visible problem. Avoiding the merge might hide the problem until it pops up much later in CI. I guess I’ll have to play around with this to make up my mind.

Tip #13: Validate the composer.json during the CI build

This is a great tip! I have had plenty of issues with composer validation in the past. Currently, we have a couple of unit tests that make sure that composer files are valid and up-to-date. Using a native mechanism for that is a much better option.

Tip #15: Specify the production PHP version in composer.json

This sounds like an amazing feature, which I once again missed. It is especially useful now, as we are still migrating some projects from PHP 5.6 to PHP 7.1 and have to sort out dependency conflicts between the two versions.

Tip #20: Use authoritative class map in production

We are almost doing this already, but it’s a good opportunity to verify that we use the functionality correctly.
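Tips #8 and #15 both come down to keys in the config section of composer.json: sort-packages and platform. Since settings get merged down from templates into projects, a small script could roll them out everywhere. The following is a minimal Python sketch of that idea, not something from the original post; the helper name apply_composer_settings and the PHP version 7.1.3 are hypothetical placeholders.

```python
import json


def apply_composer_settings(composer_json: str, php_version: str) -> str:
    """Return composer.json text with sort-packages and platform.php applied.

    Hypothetical helper for rolling settings out to many projects; pick a
    php_version that matches what production actually runs.
    """
    data = json.loads(composer_json)
    config = data.setdefault("config", {})
    # Tip #8: with this set, `composer require` keeps require/require-dev
    # sorted by name when it adds a package.
    config["sort-packages"] = True
    # Tip #15: resolve dependencies as if running on the production PHP
    # version, regardless of the PHP installed on the developer's machine.
    config.setdefault("platform", {})["php"] = php_version
    # Write back with 4-space indentation, matching composer's own output.
    return json.dumps(data, indent=4, ensure_ascii=False) + "\n"


if __name__ == "__main__":
    original = '{"require": {"php": "^7.1", "monolog/monolog": "^1.23"}}'
    print(apply_composer_settings(original, "7.1.3"))
```

Using setdefault keeps any existing config and platform entries intact, so the script only adds or overwrites the two settings it cares about. For tip #20, the authoritative class map can be requested at deploy time with composer install --classmap-authoritative (or composer dump-autoload --classmap-authoritative), which leaves development autoloading untouched.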

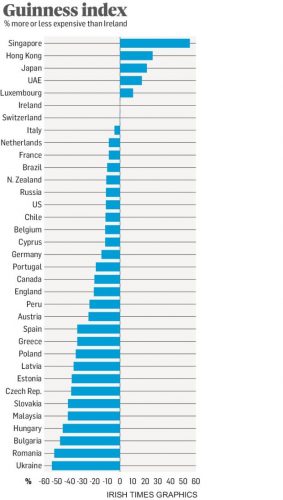

A pint of Guinness gets cheaper when it leaves Ireland

This article examines what happens to the price of the Guinness pint outside of Ireland.

In almost all cases, the price of a pint of Guinness gets cheaper when it leaves Ireland. In some cases the difference is enormous.

Zero-Width Characters

This article shows a couple of interesting techniques that use zero-width characters for the invisible fingerprinting of text.

In early 2016 I realized that it was possible to use zero-width characters, like zero-width non-joiner or other zero-width characters like the zero-width space to fingerprint text. Even with just a single type of zero-width character the presence or non-presence of the non-visible character is enough bits to fingerprint even the shortest text.

[…]

I also realized that it is possible to use homoglyph substitution (e.g., replacing the letter “a” with its Cyrillic counterpart, “а”), but I dismissed this as too easy to detect due to the differences in character rendering across fonts and systems. However, differences in dashes (en, em, and hyphens), quotes (straight vs curly), word spelling (color vs colour), and the number of spaces after sentence endings could probably go undetected due to their frequent use in real text.

[…]

The reason I’m writing about this now is that it appears both homoglyph substitution and zero-width fingerprinting have been discovered by others, so journalists should be informed of the existence of these techniques.
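The presence or non-presence scheme described in the quote is simple enough to sketch. The following Python is a minimal illustration under stated assumptions, not the article author’s implementation: it uses only the zero-width space (U+200B) and encodes one bit per word gap (a zero-width space right after the i-th space means bit i is 1). The function names are hypothetical. The last function is a crude detector for both zero-width characters and non-ASCII letters that might be homoglyphs.

```python
import unicodedata

# Hypothetical scheme: one bit per word gap. A zero-width space right after
# the i-th space means bit i is 1; no zero-width space means bit i is 0.
ZWSP = "\u200b"  # ZERO WIDTH SPACE


def embed_fingerprint(text: str, fingerprint: int, bits: int = 8) -> str:
    """Hide `fingerprint` in `text`, most significant bit first."""
    if not 0 <= fingerprint < 2 ** bits:
        raise ValueError("fingerprint does not fit in the given number of bits")
    if text.count(" ") < bits:
        raise ValueError("text has too few spaces to carry the fingerprint")
    out = []
    bit_index = 0
    for ch in text:
        out.append(ch)
        if ch == " " and bit_index < bits:
            if (fingerprint >> (bits - 1 - bit_index)) & 1:
                out.append(ZWSP)
            bit_index += 1
    return "".join(out)


def extract_fingerprint(text: str, bits: int = 8) -> int:
    """Read the bits back: is each of the first `bits` spaces followed by a ZWSP?"""
    value = 0
    seen = 0
    for i, ch in enumerate(text):
        if ch == " " and seen < bits:
            bit = 1 if text[i + 1:i + 2] == ZWSP else 0
            value = (value << 1) | bit
            seen += 1
    return value


def find_suspicious_characters(text: str) -> list:
    """Crude detector: flag invisible format characters (Unicode category Cf)
    and non-ASCII letters, which may be homoglyphs such as Cyrillic "а"."""
    flagged = []
    for i, ch in enumerate(text):
        if unicodedata.category(ch) == "Cf" or (ch.isalpha() and not ch.isascii()):
            flagged.append((i, unicodedata.name(ch, "UNKNOWN")))
    return flagged


if __name__ == "__main__":
    marked = embed_fingerprint("the quick brown fox jumps over the lazy dog again", 178)
    print(extract_fingerprint(marked))
    print(find_suspicious_characters(marked))
```

The marked text looks identical to the original when rendered. The detector also flags legitimate text, such as accented letters or zero-width joiners inside emoji sequences, so its hits are hints rather than proof.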

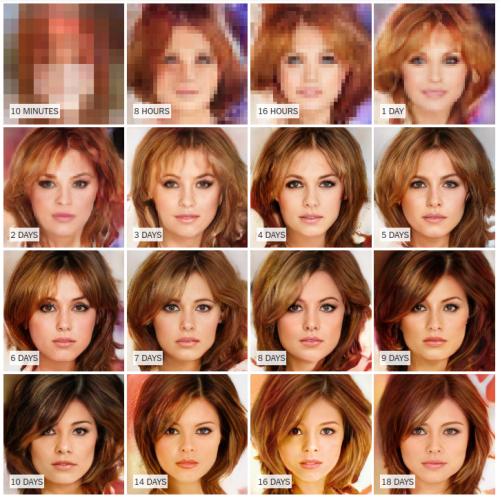

How an A.I. ‘Cat-and-Mouse Game’ Generates Believable Fake Photos

The New York Times is running a fascinating article on the progress of artificial intelligence and machine learning in both identifying and generating fake photos: How an A.I. ‘Cat-and-Mouse Game’ Generates Believable Fake Photos. The image above shows the progress of the AI working against itself and learning from its own results. One part tries to identify whether a photo is fake, and the other tries to generate a fake photo that will pass the test. When the test fails, the system learns, improves, and tries again. Look at the last row of photos: they are super realistic, and the system took between 10 and 18 days to learn how to generate them.

But that’s not all. It gets better, and I quote:

A second team of Nvidia researchers recently built a system that can automatically alter a street photo taken on a summer’s day so that it looks like a snowy winter scene. Researchers at the University of California, Berkeley, have designed another that learns to convert horses into zebras and Monets into Van Goghs. DeepMind, a London-based A.I. lab owned by Google, is exploring technology that can generate its own videos. And Adobe is fashioning similar machine learning techniques with an eye toward pushing them into products like Photoshop, its popular image design tool.

Here are a few more photos that were generated:

This is remarkable. But if you keep reading the article, you’ll quickly discover that there is even more to it. What’s next in line after pictures? You are correct: videos. You’d better sit down before you watch this video, showing Obama’s lip sync:

So, can’t trust the TV. Can’t trust the Internet. Who do you trust?