Until now (in fact, even yesterday) I was telling people that Google uses the HTML <title> tag of the given page when displaying search results. Turns out, this is not always true.

Tag: web promotion

WordPress plugin: Google XML Sitemaps 4.0 significant changes

One of the most popular WordPress plugins – Google XML Sitemaps – has recently been upgraded to version 4.0, with some significant changes. Here is a quote from the changelog:

New in Version 4.0 (2014-03-30):

- No static files anymore, sitemap is created on the fly!

- Sitemap is split-up into sub-sitemaps by month, allowing up to 50.000 posts per month!

- Support for custom post types and custom taxonomies!

- 100% Multisite compatible, including by-blog and network activation.

- Reduced server resource usage due to less content per request.

- New API allows other plugins to add their own, separate sitemaps.

- Note: PHP 5.1 and WordPress 3.3 is required! The plugin will not work with lower versions!

- Note: This version will try to rename your old sitemap files to *-old.xml. If that doesn’t work, please delete them manually since no static files are needed anymore!
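To make the "sub-sitemaps by month" idea more concrete, here is a rough Python sketch of how such a split could work. This is not the plugin's actual code (the plugin is written in PHP). The function names and the `sitemap-YYYY-MM.xml` file naming are my own assumptions for illustration; only the XML namespace and the 50,000 URL limit come from the sitemaps.org protocol.

```python
from collections import defaultdict
from datetime import date
import xml.etree.ElementTree as ET

# Namespace and per-file URL limit defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000


def group_by_month(posts):
    """Group (url, published_date) pairs under 'YYYY-MM' keys."""
    groups = defaultdict(list)
    for url, published in posts:
        groups[published.strftime("%Y-%m")].append(url)
    return dict(groups)


def build_index(base_url, months):
    """Build a sitemap index that points at one sub-sitemap per month.

    The 'sitemap-YYYY-MM.xml' naming is hypothetical, not the plugin's.
    """
    root = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for month in sorted(months):
        entry = ET.SubElement(root, "sitemap")
        ET.SubElement(entry, "loc").text = f"{base_url}/sitemap-{month}.xml"
    return ET.tostring(root, encoding="unicode")


def build_month_sitemap(urls):
    """Build the sub-sitemap for a single month, generated on request."""
    if len(urls) > MAX_URLS_PER_SITEMAP:
        raise ValueError("too many URLs for a single sitemap file")
    root = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        node = ET.SubElement(root, "url")
        ET.SubElement(node, "loc").text = url
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Made-up sample posts, purely for demonstration.
    sample_posts = [
        ("https://example.com/first-post", date(2014, 3, 1)),
        ("https://example.com/second-post", date(2014, 3, 30)),
        ("https://example.com/third-post", date(2014, 4, 2)),
    ]
    by_month = group_by_month(sample_posts)
    print(build_index("https://example.com", by_month))
    print(build_month_sitemap(by_month["2014-04"]))
```

Since each request only has to produce either the small index or a single month of URLs, this also shows why the changelog can claim reduced server resource usage per request.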

11 ways you can tweet better

- Use a hashtag to drive the conversation.

- Organize a live event using Twitter.

- Tweet the past as if it were the present.

- Have a celebrity take over your account.

- Join the conversation where it takes place.

- You ask the questions.

- Live-tweet a breaking news event.

- Team up with the co-stars and use hashtags to drive fans to a TV show.

- Use Vine videos.

- Use a custom timeline to curate the best content.

- Voting and displaying on air

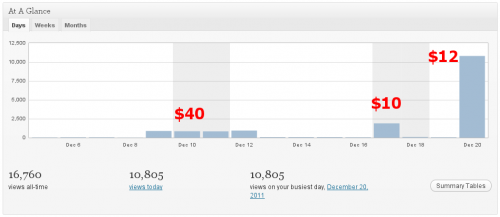

Website traffic, the learning curve

I’ve built plenty of websites over the years. Some I built from scratch; others were mere customizations and adaptations of someone else’s work. But when it came to web promotion, I usually handed it over to someone else. Don’t get me wrong – I have a pretty good idea of how these things work, but I didn’t keep up and I haven’t practiced in a long while.

Currently, I am involved in a project where the web promotion bit is my responsibility, at least until the project grows and earns enough to hire a professional. So I’m using it as a platform to refresh my knowledge, catch up with current trends, tools, and techniques, and try out a few ideas of my own. It is an interesting experience.

One thing I like is that the website is brand new, on a very young domain with no previous history. The A/B tests and statistical breakdowns are very clean. There is an opportunity to measure the effects of this or that campaign with a lot of precision and no interference from any other traffic sources.

A lot has changed since the last time I did this. One thing that amazes me is how dirt cheap web traffic is these days. When I first went in to buy some, I had a price in my head. I paid less and I got more than I expected. Then I studied it for a few days and got a way better price. Then I tried something else and got an even better price. I’m sure I’m not at the end of the tunnel yet either.

Of course, this is random, untargeted traffic that hardly converts. But it does have its pros this early in the game, and given the price, it’s well worth it. Even so, I’ve got more conversions than I hoped for.

Let me mention it once again – I am pretty much a newbie in practical terms. If you have any advice or resources that you think might help me out, please share and let me know. Once I get a better handle on it, I’ll share my thoughts and experiences too. Right now though it’s too embarrassing to do so.

Content authorship is the new cool

Here is a quote directly from Google’s Inside Search blog:

We now support markup that enables websites to publicly link within their site from content to author pages. For example, if an author at The New York Times has written dozens of articles, using this markup, the webmaster can connect these articles with a New York Times author page. An author page describes and identifies the author, and can include things like the author’s bio, photo, articles and other links.

If you run a website with authored content, you’ll want to learn about authorship markup in our help center. The markup uses existing standards such as HTML5 (rel=”author”) and XFN (rel=”me”) to enable search engines and other web services to identify works by the same author across the web. If you’re already doing structured data markup using microdata from schema.org, we’ll interpret that authorship information as well.

[…]

We know that great content comes from great authors, and we’re looking closely at ways this markup could help us highlight authors and rank search results.

In simple terms, this means that you should make sure that all your content – no matter where it is published – identifies you as the author. This will help link all your content together, build your author profile, and serve as yet another criterion in ranking and searching. Those of you publishing with WordPress shouldn’t worry at all – authorship markup is either already in place or will take a minor modification to the theme. WordPress has provided both author pages and XFN markup out of the box for years.
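For those not on WordPress, here is a small Python sketch of what the markup from the quote boils down to: a link with rel="author" from the article to the author page, and a link with rel="me" from the author page to the author's other profiles. The function names and example URLs are made up for illustration; only the two `rel` values come from the quote above.

```python
from html import escape


def author_byline(name, author_page_url):
    """Link from an article to the author's page on the same site."""
    href = escape(author_page_url, quote=True)
    return f'<a rel="author" href="{href}">{escape(name)}</a>'


def profile_link(label, profile_url):
    """Link from the author page to another profile of the same person."""
    href = escape(profile_url, quote=True)
    return f'<a rel="me" href="{href}">{escape(label)}</a>'


if __name__ == "__main__":
    # Hypothetical author and URLs, purely for demonstration.
    print(author_byline("Jane Doe", "https://example.com/author/jane/"))
    print(profile_link("Jane elsewhere", "https://profiles.example.org/jane"))
```

In a real theme this would live in the post and author templates. The point is only that each piece of content carries a machine-readable pointer to a single author page.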