I’ve been hiring, firing, and working with developers of all sorts for the last couple of decades. Over those years, I’ve realized that each developer is unique – their strengths and weaknesses, knowledge gaps, working rhythm, social interaction, communication abilities, etc. But regardless of how unique each developer is, it is often useful to group them into expertise levels, like junior and senior. Companies do that for a variety of reasons – billing rates, expectations, required training, responsibility, etc.

And this is where things get tricky. One needs a good definition of what a senior developer is (other definitions can be derived from this one too). There is no standard definition that everybody agrees upon, so everyone has their own.

I mostly consider a senior developer to be self-sufficient and self-motivated. It’s somebody who has the expertise to solve, or find ways of solving, any kind of technical problem. It’s also someone who can see the company’s business needs and issues, and can find work to do, even if nothing has been recently assigned to him. A senior developer would also provide guidance and mentorship to junior teammates. I’ve also come to believe that people with real expertise have no problem discussing complex technical issues in simple terms, but that’s just a side note.

Anyway, I recently came across this very short blog post, which sent me on a spree of pages, charts, and discussions:

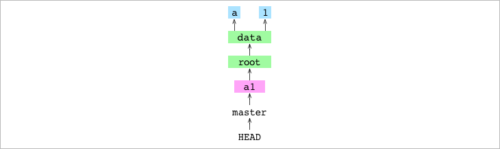

Because of this “What is a senior developer?” conversation on Reddit, I am reminded of the Construx Professional Development Ladders, as mentioned to me long ago by Alejandro Garcia Fernandez. Here is a sample ladder for developers.

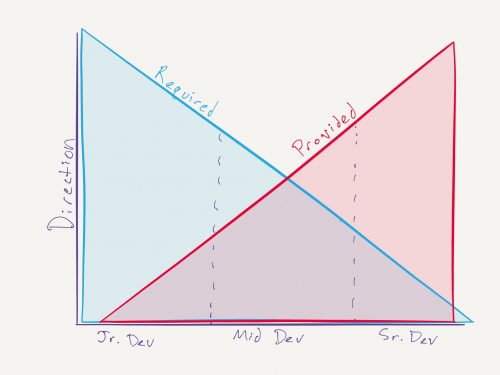

The original article behind the Reddit discussion, “The Conjoined Triangles of Senior-Level Development”, is absolutely brilliant. Early on, it presents a chart of the conjoined triangles of senior-level development, which reflects my own definition and understanding:

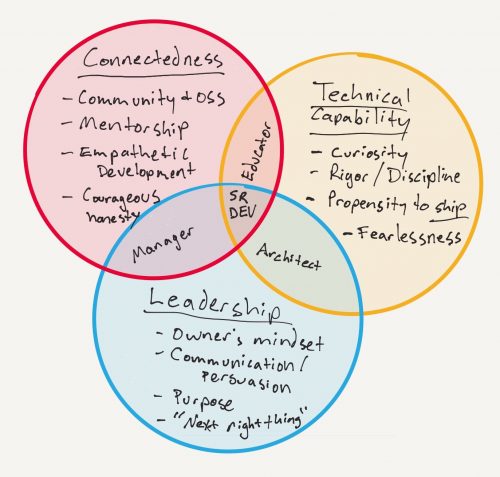

But it doesn’t stop there. It dives deeper into the problem and eventually features a Venn diagram, among other things.

By now, I’ve read the article three times, but I keep coming back to it – it just makes me think and rethink, over and over again. Once it settles in my head a bit, I’ll look deeper into the Professional Development Ladder and its example application to the senior developer.

Overall, this is a very thought-provoking bunch of links.